Adding Virtualization Support

Status: work in progress to update to F40. Improvements from merge https://pagure.io/fedora-server/c/bb66b8d11bd5881147c3b4198a900fd487e67a13?branch=main are already included.

QEMU/KVM in combination with the libvirt management toolkit is the standard virtualization method in Fedora. This optionally includes a local virtual network that you may use for protected communication between the virtual guest systems and between the guests and the host. Its default configuration enables access to the public network via NAT, which is useful for virtual machines or containers without direct access to the public network interface.

Preparation

Hardware requirements

QEMU / KVM require hardware virtualization support. The first thing to do is to make sure that it is available.

[…]# grep -E --color 'vmx|svm' /proc/cpuinfo

The command will return one line per CPU core if virtualization is enabled. If not, you should first check in the BIOS whether virtualization is disabled.
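For scripted checks, the same test can be reduced to a number. The following is a minimal sketch, not part of the official procedure: the function name count_virt_cpus and its optional file argument are illustrative assumptions. It counts only the "flags" lines, because newer kernels may print an additional "vmx flags" line per CPU that would otherwise be counted as well.

```shell
#!/bin/sh
# count_virt_cpus [FILE]
# Print the number of CPU entries whose "flags" line advertises
# hardware virtualization (vmx = Intel VT-x, svm = AMD-V).
# FILE defaults to /proc/cpuinfo; the argument exists for testing only.
count_virt_cpus() {
    awk -F: '
        # Field 1 is the key ("flags"), field 2 the space-separated flag list.
        $1 ~ /^flags[ \t]*$/ && $2 ~ /(^| )(vmx|svm)( |$)/ { n++ }
        END { print n + 0 }
    ' "${1:-/proc/cpuinfo}"
}
```

A result of 0 from count_virt_cpus means that no CPU reports the feature, and the BIOS setting should be checked.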

Storage set up

Libvirt stores its data including the image files of the virtual hard disk(s) for the guest systems in /var/lib/libvirt. If you adhere to the default partitioning layout, the libvirt application data is stored in its own logical volume that you have to create in advance. You need to specify the size of the storage area, a unique name, and the accommodating VG (fedora_fedora in case of default partitioning). In the new logical volume, create an xfs file system and mount it at /var/lib/libvirt.

Cockpit

The easiest way is to use Cockpit. Start your favorite browser and navigate to your server, named example.com here.

https://example.com:9090

If there is no valid public certificate installed so far, a browser warning appears and you have to accept an exception for the self-signed certificate. The subsequent login can use either the root account or an unprivileged administrative user account.

In Cockpit select "Storage" in the left navigation column and then the target volume group in the device list at the top of the right column of the opening window. The center content area changes to show the selected volume group at the top and a list of existing logical volumes below it that may be empty for now.

To create a logical volume select "Create logical volume" next to the 'Logical volumes' section title. In the form that opens, fill in the name of the new logical volume at the top, e.g. in this case 'libvirt'. Leave the usage field as 'File system' and adjust the size at the bottom, e.g. 500 GiB. Then create the LV.

In the 'Logical volumes' list, a new line appears with the LV name, libvirt in this example, as part of the device part on the right side. Expand that line and select 'format' on the right side. In the form that opens, fill in the name of the new file system, e.g. in this case 'libvirt', and the mount point, /var/lib/libvirt in this case. Leave the other fields at their default values. Select 'Format' and Cockpit will handle everything else.

After completion, the file system is immediately available and is also permanently configured in the system accordingly.

Command line

Some administrators may prefer the command line for easy scripting. Create a logical volume of appropriate size, 50 GiB in this example, either in the system volume group (named fedora by default) or in the user data VG if one was created during installation. Adjust size and VG name as required.

[…]# lvcreate -L 50G -n libvirt fedora

[…]# mkfs.xfs /dev/fedora/libvirt

[…]# mkdir -p /var/lib/libvirt

[…]# vim /etc/fstab

/dev/mapper/fedora-root / xfs defaults 0 0

/dev/mapper/fedora-libvirt /var/lib/libvirt xfs defaults 0 0
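Before mounting, it can be worth confirming that the new entry really is in place, especially in a script. The following sketch assumes a helper named fstab_has_mount (a hypothetical name, not an existing tool) that looks for a mount point in the second field of an fstab-style file.

```shell
#!/bin/sh
# fstab_has_mount MOUNTPOINT [FILE]
# Succeed (exit status 0) if FILE contains a non-comment entry whose
# second field equals MOUNTPOINT. FILE defaults to /etc/fstab.
fstab_has_mount() {
    awk -v mp="$1" '
        /^[ \t]*#/ { next }        # skip comment lines
        $2 == mp   { found = 1 }   # second field is the mount point
        END        { exit (found ? 0 : 1) }
    ' "${2:-/etc/fstab}"
}
```

For example, `fstab_has_mount /var/lib/libvirt || echo "entry missing"` reports a forgotten entry before any mount is attempted.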

[…]# mount -a

Installing libvirt virtualization software

Installing the software is quite simple.

[…]# dnf install qemu-kvm-core libvirt virt-install cockpit-machines guestfs-tools

Be sure to install guestfs-tools, not libguestfs-tools (unless you need additional Windows guest related software). The package guestfs-tools provides a basic set of useful tools to maintain virtual disks. Additional packages provide support for specific use cases, e.g. various file systems or forensic support. Use dnf search guestfs to get a list of available packages.

Do not install the group @virtualization onto a Fedora Server. It includes various graphical programs and libraries that are not usable on headless servers.

Next check the SELinux labels

[…]# ls -alZ /var/lib/libvirt

Usually, installation sets the SELinux labels properly. Otherwise, set them manually.

[…]# restorecon -R -vF /var/lib/libvirt

If everything is correct, the next step is to activate autostart after re-boot and start KVM and libvirtd.

With Fedora 35, libvirt switched to a modular architecture (since version 7.6.0-3), whereas releases up to Fedora 34 (version 7.0.0-x) used a single monolithic libvirt daemon. The installation procedure is the same because the packaging system takes care of the differences. Activation and startup differ, however, as do the installation and configuration of an internal protected virtual network between the VMs and the host.
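A script that has to serve both generations can branch on the release number. This is a small sketch under the assumption stated above (monolithic up to Fedora 34, modular from Fedora 35 on); the function name libvirt_daemon_mode is made up for illustration.

```shell
#!/bin/sh
# libvirt_daemon_mode RELEASE
# Print "modular" for Fedora 35 and up, "monolithic" for older releases.
# RELEASE is the numeric Fedora release, e.g. 34 or 40.
libvirt_daemon_mode() {
    if [ "$1" -ge 35 ]; then
        echo modular
    else
        echo monolithic
    fi
}
```

On a running system the release number is available as VERSION_ID after sourcing /etc/os-release, so `. /etc/os-release && libvirt_daemon_mode "$VERSION_ID"` would select the matching set of instructions below.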

Activation and startup with Fedora 34

Enable automatic startup at boot and start libvirt.

[…]# systemctl enable libvirtd --now

By default, libvirt creates a (virtual) bridge with an interface virbr0, the IP address 192.168.122.1, and the libvirt-internal name default. In addition, a separate firewall zone libvirt is set up and assigned to the internal interface. Check if everything is running as expected.

[…]# ip a

[…]# firewall-cmd --get-active-zones

Activation and startup with Fedora 35 and up

The libvirt Fedora installation procedure provides systemd startup scripts that take care of enabling and starting the various unix sockets and services as needed. This includes support for qemu, xen and lxc. Configuration of vbox is disabled by default. The drivers determine during startup whether the required prerequisites are met and abort otherwise. The default services, qemu and lxc in case of Fedora Server, are started at boot time. If not used for about one minute they are deactivated, but will restart on demand as soon as a virtual machine is started (either by command line or Cockpit service). There is no need for administrator intervention at all.

The network configuration is slightly different. The services don’t start at boot time, like qemu, lxc, etc., but on demand at first access. Therefore, you won’t get an interface virbr0 until some libvirt service requests it. That’s sometimes inconvenient, e.g. if you use that interface for non-libvirt services, too (e.g. lxd or nspawn container). You may prefer to enable the virt-network service anyway:

- Optionally, activate libvirt’s internal network

If you are planning to use the virtual network independently from starting virtual machines, or you need the virbr0 interface at boot time anyway, enable libvirt’s internal network. Otherwise you may skip this step.

[…]# systemctl enable virtnetworkd.service --now

Alternatively, you may want to completely discard libvirt’s internal network. You’ll take this path if you set up an internal network with NetworkManager tools.

[…]# systemctl disable virtnetworkd.socket --now

- Activate (start) the required libvirt modular drivers

[…]# for drv in qemu interface network nodedev nwfilter secret storage ; \
      do systemctl start virt${drv}d{,-ro,-admin}.socket ; done

The virtualization functionality can be used immediately. An interface virbr0 as well as a dnsmasq server is now available, regardless of when and if a libvirt service is started. The virtnetwork service will terminate if no libvirt service starts up within the first 1-2 minutes (and later restart automatically if needed). But the interface and the dnsmasq server remain regardless.

The virtualization services (virtqemud.service, etc.) will likewise be dormant if no VM has been started. But the sockets are active and will start the corresponding service on demand.

The boot process now starts virtualization automatically without administrative intervention.

- Check successful start via a status query

[…]# for drv in qemu interface network nodedev nwfilter secret storage ; \
      do systemctl status virt${drv}d{,-ro,-admin}.socket ; done
● virtqemud.socket - libvirt QEMU daemon socket
     Loaded: loaded (/usr/lib/systemd/system/virtqemud.socket; enabled; preset: enabled)
     Active: active (listening) since Wed 2024-04-...
   Triggers: ● virtqemud.service
     Listen: /run/libvirt/virtqemud-sock (Stream)
     CGroup: /system.slice/virtqemud.socket

Apr 10 13:51:33 example.com systemd[1]: Listening on virtqemud.socket - libvirt QEMU daemon socket.

● virtqemud-ro.socket - libvirt QEMU daemon read-only socket
     Loaded: loaded (/usr/lib/systemd/system/virtqemud-ro.socket; enabled; preset: enabled)
     Active: active (listening) since Wed 2024-04-...
   Triggers: ● virtqemud.service
     Listen: /run/libvirt/virtqemud-sock-ro (Stream)
     CGroup: /system.slice/virtqemud-ro.socket

Apr 10 13:51:33 example.com systemd[1]: Listening on virtqemud-ro.socket - libvirt QEMU daemon read-only socket.

● virtqemud-admin.socket - libvirt QEMU daemon admin socket
     Loaded: loaded (/usr/lib/systemd/system/virtqemud-admin.socket; enabled; preset: enabled)
     Active: active (listening) since Wed ...
   Triggers: ● virtqemud.service
     Listen: /run/libvirt/virtqemud-admin-sock (Stream)
lines 1-23
...
...
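The loops above rely on the bash brace expansion `{,-ro,-admin}`, which turns each driver name into three socket unit names. To make that pattern explicit, here is a sketch that prints the resulting unit names with plain nested loops; the function name libvirt_socket_units is a hypothetical helper, not something libvirt ships.

```shell
#!/bin/sh
# libvirt_socket_units
# Print one socket unit name per line for every modular driver used in
# this guide: the main socket plus its read-only and admin variants.
libvirt_socket_units() {
    for drv in qemu interface network nodedev nwfilter secret storage; do
        for suffix in "" -ro -admin; do
            echo "virt${drv}d${suffix}.socket"
        done
    done
}
```

The list can be fed to other systemctl queries, for example `libvirt_socket_units | xargs systemctl is-enabled`, to get a more compact overview than the full status output.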

Adjusting libvirt internal network configuration

The default configuration of the internal network (virbr0) activates just a DHCP server. If the virtual machines should also be able to communicate with each other and the host, adding a DNS server is highly advisable. It is easier and less error-prone to address VMs and the host by name instead of by IP address.

The first step is to choose a domain name. A top-level ".local" is explicitly not recommended, nor is taking one of the official top-level names. But you can, for example, take the official domain name and replace the last, top-level part with 'lan', 'internal', or 'localnet'. An official domain example.com would translate to an internal domain example.lan. We use that one throughout this tutorial. The host gets the internal name host.example.lan.
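The naming rule is simple enough to express as a one-line string operation. The following sketch assumes a helper called internal_domain (an illustrative name) that swaps the top-level part of an official domain for an internal suffix, 'lan' unless another one is given.

```shell
#!/bin/sh
# internal_domain OFFICIAL_DOMAIN [SUFFIX]
# Replace the last dot-separated label of OFFICIAL_DOMAIN with SUFFIX
# (default: lan) and print the result.
internal_domain() {
    # ${1%.*} removes the shortest trailing ".something", i.e. the TLD.
    echo "${1%.*}.${2:-lan}"
}
```

With this, `internal_domain example.com` yields the example.lan used in the rest of this guide, and `internal_domain example.com internal` yields example.internal.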

Use the libvirt tool to adjust the default network. Replace names and placeholders as required. Delete the line with "forward mode = 'nat'" if you do not want to allow access to the public network via the virtual network.

[…]# virsh net-edit default

<network>

<name>default</name>

<uuid>aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee</uuid>

<bridge name='virbr0' stp='on' delay='0'/>

<mac address='52:54:00:xx:yy:zz'/>

<forward mode='nat'/>

<mtu size='8000'/>

<domain name='example.lan' localOnly='yes'/>

<dns forwardPlainNames='no'>

<forwarder domain='example.lan' />

<host ip='192.168.122.1'>

<hostname>host</hostname>

<hostname>host.example.lan</hostname>

</host>

</dns>

<ip address='192.168.122.1' netmask='255.255.255.0'>

<dhcp>

<range start='192.168.122.2' end='192.168.122.254'/>

</dhcp>

</ip>

</network>

Activate the modified configuration.

[…]# virsh net-destroy default

[…]# virsh net-start default

Check if the DNS resolution works.

[…]# nslookup host 192.168.122.1

[…]# dig @192.168.122.1 host.example.com

Adjusting the host’s DNS resolution configuration

In order for the host to initiate communication with its virtual machines, it must query the name server set up in the previous step for names in the internal domain. This requires split DNS. The default DNS client in Fedora, systemd-resolved, is basically split-DNS capable. Unfortunately, it currently doesn’t cooperate well with libvirt’s virtual network: it needs additional administrative effort, and its operation is quite unstable. Therefore we recommend switching to dnsmasq for the time being to provide stable split DNS.

The following procedure doesn’t work for Fedora 35-39. Don’t use it with these releases. Switch to dnsmasq for the time being as described in the next chapter instead.

- The name resolver service systemd-resolved introduced with Fedora 33 can do this automatically. But libvirt handles its interfaces on its own and must therefore inform systemd-resolved about it. A script in a hook provided by libvirt can take care of this. You have to adjust the local domain name (example.lan in the script below) accordingly!

[…]# mkdir -p /etc/libvirt/hooks/network.d/
[…]# vim /etc/libvirt/hooks/network.d/40-config-resolved.sh
#>--INSERT--<#
#!/bin/bash
# Add the internal libvirt interface virbr0 to the
# systemd-resolved configuration.
# Its automatic configuration of systemd-resolved cannot
# (yet) detect a libvirt DNS server.

network="$1"
operation="$2"
suboperation="$3"

# $1 : network name, eg. default
# $2 : one of "start" | "started" | "port-created"
# $3 : always "begin"
# see https://libvirt.org/hooks.html

ctstartlog="/var/log/resolve-fix.log"

echo " P1: $1 - P2: $2 - P3: $3 @ $(date) "
echo " " > $ctstartlog
echo "======================================= " >> $ctstartlog
echo " P1: $1 - P2: $2 - P3: $3 @ $(date) " >> $ctstartlog

if [ "$network" == "default" ]; then
  if [ "$operation" == "started" ] && [ "$suboperation" == "begin" ]; then
    echo " Start fixing .... " >> $ctstartlog
    resolvectl dns virbr0 192.168.122.1
    resolvectl default-route virbr0 false
    resolvectl domain virbr0 example.lan
    echo " .... done " >> $ctstartlog
    echo " Checking .... " >> $ctstartlog
    echo " Executing resolvectl " >> $ctstartlog
    resolvectl status >> $ctstartlog
    echo " " >> $ctstartlog
    echo " Executing cat resolve " >> $ctstartlog
    cat /etc/resolv.conf >> $ctstartlog
    echo " .... done " >> $ctstartlog
  fi
fi
#>--SAVE&QUIT--<#
[…]# chmod +x /etc/libvirt/hooks/network.d/40-config-resolved.sh

- Activate the modified local DNS resolving. Check that /etc/resolv.conf is a link and not a file.

[…]# ls -al /etc/resolv.conf

In case it is a file, fix it:

[…]# cd /etc
[…]# rm -f resolv.conf
[…]# systemctl restart systemd-resolved
[…]# ln -s ../run/systemd/resolve/stub-resolv.conf resolv.conf

- Test the hook file

[…]# /etc/libvirt/hooks/network.d/40-config-resolved.sh default started begin
P1: default - P2: started - P3: begin @ Mon Mar ...

- It is useful to modify the host’s search path to resolve a short single hostname to the internal network.

[…]# /etc/libvirt/hooks/network.d/40-config-resolved.sh default started begin
P1: default - P2: started - P3: begin @ Mon Mar ...

- Check the functionality of name resolution with internal and external addresses.

[…]# ping host
[…]# ping host.example.lan
[…]# ping host.example.com
[…]# ping guardian.co.uk

Everything should work fine now.

Switch to NetworkManager’s dnsmasq plugin

The NetworkManager dnsmasq plugin is an easy way to add a local caching DNS server with split DNS support. It provides the host with a lightweight, capable DNS server as an alternative to systemd-resolved. By default it forwards all queries to an external, 'official' DNS server. We just have to configure the local libvirt domain.
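The effect of split DNS can be pictured as a simple routing decision per query name. The sketch below only models that decision and performs no DNS lookup: names inside the internal domain go to libvirt's DNS server, everything else goes to the regular upstream resolver. The function name dns_server_for and the literal result "upstream" are illustrative assumptions, and the defaults are the example values used in this guide.

```shell
#!/bin/sh
# dns_server_for NAME [DOMAIN] [SERVER]
# Print the server that should answer a query for NAME: SERVER for
# DOMAIN itself and any name below it, otherwise "upstream".
dns_server_for() {
    name=$1
    domain=${2:-example.lan}
    server=${3:-192.168.122.1}
    case "$name" in
        "$domain"|*."$domain") echo "$server" ;;   # internal name
        *)                     echo upstream ;;    # everything else
    esac
}
```

For instance, `dns_server_for host.example.lan` selects 192.168.122.1, while `dns_server_for guardian.co.uk` falls through to the upstream server.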

Activate dnsmasq plugin

[…]# vim /etc/NetworkManager/conf.d/00-use-dnsmasq.conf

# /etc/NetworkManager/conf.d/00-use-dnsmasq.conf

#

# This enables the dnsmasq plugin.

[main]

dns=dnsmasq

Define the local domain and DNS service

[…]# vim /etc/NetworkManager/dnsmasq.d/00-example-lan.conf

# /etc/NetworkManager/dnsmasq.d/00-example-lan.conf

#

# This file directs dnsmasq to forward any request to resolve

# names under the .example.lan domain to 192.168.122.1, the

# local libvirt DNS server.

server=/example.lan/192.168.122.1

Activate modified local DNS resolving

[…]# systemctl stop systemd-resolved

[…]# systemctl disable systemd-resolved

[…]# rm /etc/resolv.conf

[…]# nmcli con mod enp3s0 ipv4.dns-search 'example.lan'

[…]# nmcli con mod enp3s0 ipv6.dns-search 'example.lan'

[…]# systemctl restart NetworkManager

Check the functionality of name resolution with internal and external addresses.

[…]# ping host

[…]# ping host.example.lan

[…]# ping host.example.com

[…]# ping guardian.co.uk

Finishing Cockpit-machines configuration

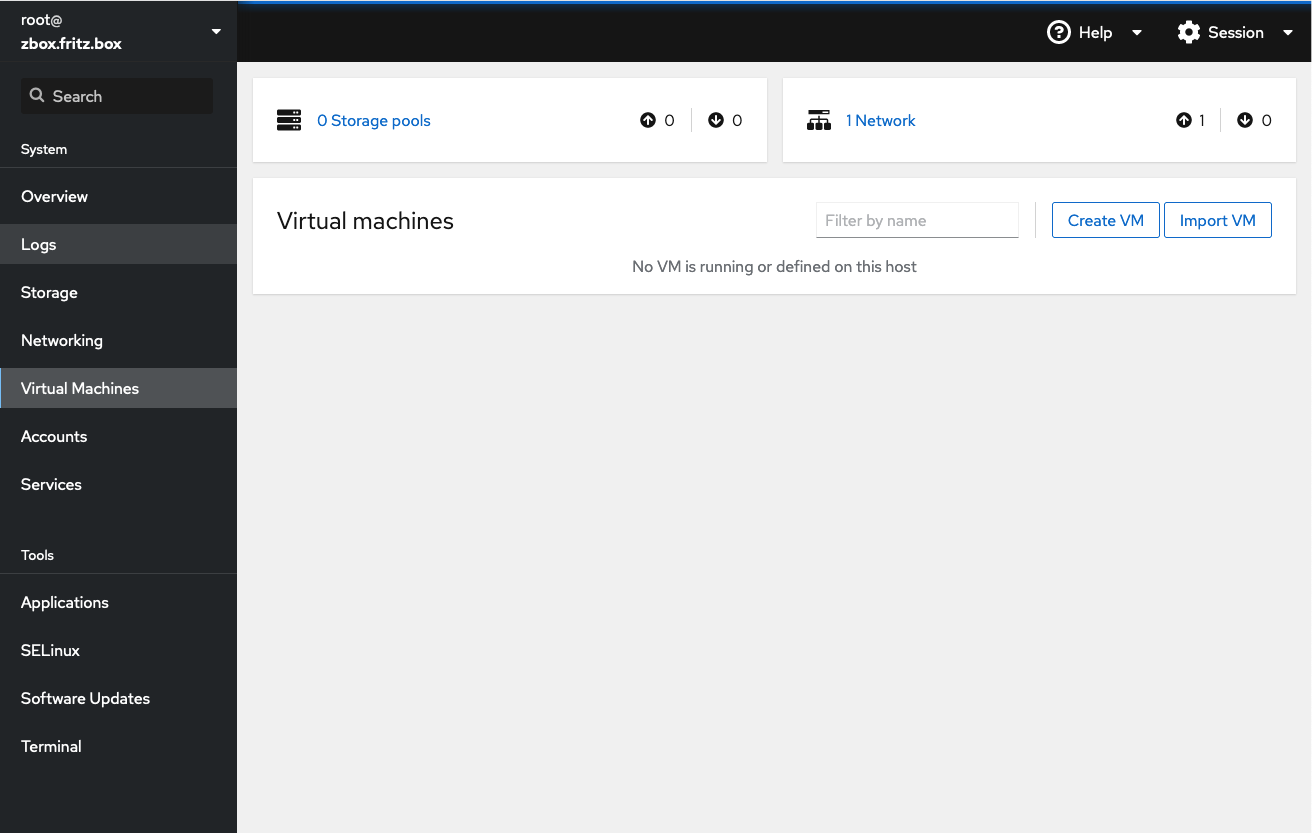

Open your browser and connect to the Cockpit instance of your host server. Consult the post-installation guide to learn about the possible connection paths. Log in as root or with your administrative account. In the overview (start) page select Virtual Machines in the left navigation column.

If there is no entry Virtual Machines in the navigation column, the cockpit-machines module was left off in the installation step above. Select Applications further down and then Machines for installation.

Currently, it sometimes takes a long time to display additional installation options. You may install the module from the command line instead (dnf install cockpit-machines).

Storage pools

When first used, the list of virtual machines displayed in the center of the page is empty, of course. A box left above that list displays 0 Storage pools. Libvirt uses pools to determine the location of typical files. The installer has already created the directories. Only the pools need to be defined here.

Typically you use one Pool for installation media, stored at /var/lib/libvirt/boot. "Installation media" would be a suitable descriptive pool name. Select 0 Storage pools in the box and then Create storage pool. A new form opens.

If you are logged in as an administrative user (even if you have used sudo su -), you are asked to select a connection type, "system" or "session". This selection is presented in various configuration forms, so we explain it here. Use "system" for production deployments, the common case. Select "session" in the special case of testing, development, and experimentation. The "session" option does not support any custom or advanced networking, but works pretty much everywhere (including containers) and without any privileges. The libvirt project provides additional information for developers. If you are logged in directly as root, this line doesn’t show up. Instead, everything is treated as system, i.e. production deployment (never do development or experimentation as root).

Next enter "Installation media" as the name, "Filesystem directory" as the type, and /var/lib/libvirt/boot as the target path.

In most cases, the (virtual) hard disk used for a virtual machine is a disk image file stored in /var/lib/libvirt/images. Define another pool named "Disk images" accordingly.

Activate both pools in the drop down menu of each pool.
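Administrators who prefer the command line can define equivalent pools with virsh. The following is a hedged sketch rather than part of the Cockpit procedure: it only prints the virsh commands (a dry run) so they can be reviewed before execution. The function name pool_commands and the short pool names boot and images are assumptions made here to avoid spaces in names; qemu:///system corresponds to the "system" connection type.

```shell
#!/bin/sh
# pool_commands NAME PATH [URI]
# Print the virsh commands that define a directory-backed storage pool,
# start it, and mark it for autostart. Nothing is executed.
pool_commands() {
    name=$1
    path=$2
    uri=${3:-qemu:///system}
    echo "virsh -c $uri pool-define-as --name $name --type dir --target $path"
    echo "virsh -c $uri pool-start $name"
    echo "virsh -c $uri pool-autostart $name"
}
```

Running `pool_commands boot /var/lib/libvirt/boot` and `pool_commands images /var/lib/libvirt/images` shows the commands for the two pools described above. Piping the output to `sh` would execute them.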

You may create additional pools as needed, e.g. disk images via iSCSI in a SAN or as a logical volume in a volume group (LVM) on the host’s local disk. The latter offers better performance in theory, but the practical gain is usually rather small, if any. We will not go into further detail here for the time being.

Networks

A box on the right above the virtual machines list shows 1 Network and lists the networks managed by libvirt. By default, it contains the internal network default with the interface virbr0. The list does not contain the external interface. It is managed by the server. Nevertheless, it is available for virtual machines.

Completed

Virtualization is now ready to use on the server and you can start setting up guest VMs.

This guide just describes the Fedora specific way to use libvirt. For further information please use the libvirt project documentation.
